Top SharePoint Blogs

SharePoint Code Check (SPCop) first impressions

SharePoint Code Check (SPCop), which is the free SharePoint code analysis component of the SharePoint Code Analysis Framework (SPCAF), was released in the first week of October 2013.

I took it for a quick test drive and this is what I found.

Historically I used a combination of FxCop, CAT.NET, StyleCop and SPDisposeCheck to ensure my custom code meets industry standards and best practices for SharePoint development.

As a SharePoint Architect I am constantly looking for ways to make it easier for our team to build reliable, robust and quality code. Code analysis tools are essential in our development lifecycle and I also find that a set of analysis tools is a very valuable training mechanism for ASP.NET developers making the transition to SharePoint development.

SPCop is a great tool for ensuring the highest possible level of quality code is produced, as it consolidates the functions of many of the individual code analysis tools into a single tool. It also allows the developer to analyse the XML code in SharePoint packages such as Features, ContentTypes and ListTemplates, as well as all the other files like controls (.ascx), pages (.aspx), master pages (.master), stylesheets (.css), JavaScript (.js) etc.

The great thing is that SPCop can be used directly in Visual Studio 2010/2012/2013 which eliminates the need for the developer to first compile assemblies and run command-line tools to analyse the code. The results of the code analysis are displayed in the Visual Studio Error List which makes it quite convenient for the developer to navigate and repair issues.

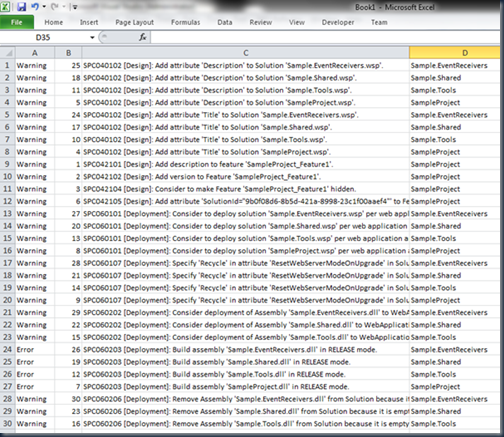

As an example I used Visual Studio 2010 to create a C# SharePoint 2010 Empty SharePoint Project and I added a Feature with a Feature Event Receiver. I did not add any code to my project. Because I hadn’t written any code yet, I trusted that the (almost empty) project would meet the appropriate standards, but when I ran SPCop I was quite surprised at how many valid warnings I received. (Imagine how much this would help a new SharePoint developer.)

The results are shown below:

This means that, even before I start writing custom code I can analyse the configuration of my project to ensure everything is in place.

The normal behaviour in Visual Studio is that if a developer double-clicks on an error or warning in the Error List, the code window opens at the location where the issue needs to be addressed. What I like about SPCop is that, because it is designed specifically for SharePoint, it can also navigate the user into some of the SharePoint configuration windows. As an example, in order to fix the deployment scope, the user is immediately presented with the Package editor window. This is something which I haven’t seen in other SharePoint tools and it is very helpful.

One of the Visual Studio solutions which I am currently working on contains 16 Visual Studio SharePoint projects. There are times when I want to run SPCop for only a specific project but there is also the need for me to run SPCop on the entire solution and consolidate the results of the code analysis of the 16 projects into a single MS Excel report.

To run SPCop against a particular project, all you have to do is right click on the project (in Solution Explorer) and select “Run SharePoint Code Check”.

To run SPCop against all projects in a solution, simply right click on the solution instead of a project.

The output can then be copied from the Error List into Excel (for analytics, reporting, etc.).

When I speak to development teams about code analysis tools and ALM, one of the first questions I always get asked is whether it is easy to customize the rule sets (the rules which the code analysis is based on). Face it, no development house is the same and no project is the same, so there will always be a need to make small modifications to the rule sets.

In Solution Explorer (in Visual Studio), right click on your SharePoint project or solution and select the SPCop Ruleset command. This will allow you to select a pre-defined rule set, modify an existing rule set or create a new custom rule set.

The SPCAF Ruleset Editor is used to modify existing or create new rule sets.

I appreciate the Schema and Help URLs which are available for the existing rules and actually think I want to encourage my fellow developers to use these as a study guide..whooohoo!!!

Overall I am immensely impressed with this (free) tool and I have no doubt that this tool will become the core of my code quality assurance.

Technological advancements, lack of experience, moving into the SharePoint development realm (from traditional ASP.NET), etc. are only some of the challenges we face. These, along with deadline pressures, demanding projects, complex work situations, etc., make it very difficult to excel and obtain a sense of achievement, so encouragement, resources, learning materials and great tools are essential for us to be successful.

Having the right tools at hand makes an enormous difference in the life of a SharePoint developer and I am grateful to Matthias Einig and the RENCORE AB team for making SPCop available. Thanks guys!!

Related - please see my blog post: The reason why Microsoft.Office.Server.Search.dll prevented me from using SPCop to analyse code.

Why Microsoft.Office.Server.Search.dll prevented me from using SPCop to analyse code.

I recently evaluated SharePoint Code Check (SPCop) and I quickly came across a problem whereby I was unable to run SharePoint Code Checks on certain projects.

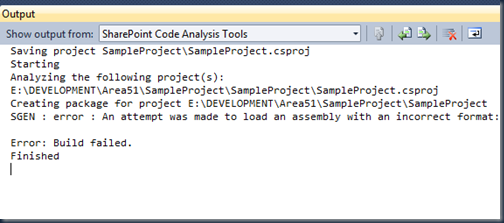

The problem was that SPCop would initiate the build but then, before the code analysis started, the following error would be shown in the Visual Studio Output window:

SGEN : error : An attempt was made to load an assembly with an incorrect format: C:\Program Files\Common Files\Microsoft Shared\Web Server Extensions\14\ISAPI\Microsoft.Office.Server.Search.dll.

The good news is that, after a bit of investigation, I found that the issue has absolutely nothing to do with SPCop. It is actually quite an old SGEN and SharePoint 2010 custom development problem, so if you come across the same problem, don’t associate it with the new release of SPCop. I know it is easy for a developer to download software, install it and then blame any immediate problems on misconfiguration or on a known issue with the new release.

This problem is not because of SPCop!!

This post will explain the detail of the issue and how to work around it.

Scenario:

I have a Visual Studio 2010 SharePoint project which contains:

• a reference to Microsoft.Office.Server.Search.dll and

• a web service reference

(in this example a reference to SharePoint Authentication service at http://SiteCollectionURL/_vti_bin/Authentication.asmx)

I am busy with development and I can successfully build my project in debug mode.

I then try to run SPCop: right click on the project, select SharePoint Code Check and then click on “Run SharePoint Code Check”.

SPCop starts to create a new build but then fails and I am unable to analyse my code :-(

Why?

Before SPCop analyses your code it builds and packages the SharePoint project to create the .wsp file which it then inspects. During the build process SGEN actually fails. It is not SPCop which fails.

I was able to build and package my project in debug mode so why is SPCop unable to build the same code?

After a bit of investigation I realized that SPCop builds the project in RELEASE mode even though my project configuration is set to DEBUG mode. This is actually great because before I finalize my code I need to build and test it in release mode anyway.

Why did SGEN fail?

If your project contains a reference to Microsoft.Office.Server.Search.dll and a reference to a web service, SGEN will fail to generate the serialization classes.

This is due to Microsoft.Office.Server.Search.dll not being an MSIL DLL but instead being compiled specifically to run on AMD64. I guess this means that SGEN is unable to reflect on it and build the serialization classes.

If you set your project to release mode and go to your project properties page, you will most likely notice in the Output section the setting for “Generate Serialization Assembly” is set to “Auto”.

The interesting thing is that when the setting is on Auto the following applies:

• When building in debug mode: Generate Serialization Assembly = OFF

• When building in release mode: Generate Serialization Assembly = ON

So, this explains why we can build in debug mode but not in release mode. And, because SPCop builds in release mode, it explains why you will receive the same SGEN error.

Proper Solution

I haven’t found a solution for building, in release mode, projects which contain both a reference to Microsoft.Office.Server.Search.dll and a web service reference. I assume that because of this issue one would build in debug mode, then use SGEN.exe to create the serialization assembly and include that assembly in the package.

There is a Work Around

The work around is to set "Generate Serialization Assembly" to "Off" on the project properties for the release build configuration.

Once you have changed this setting and saved the project you will be able to successfully analyse your code with SPCop.
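The same workaround can also be made directly in the project file. The fragment below is a minimal sketch, assuming a standard C# project whose release configuration is named Release|AnyCPU (the condition on your own project may differ); GenerateSerializationAssemblies is the MSBuild property behind the “Generate Serialization Assembly” drop-down.

```xml
<!-- Assumption: the release configuration is named Release|AnyCPU. -->
<PropertyGroup Condition=" '$(Configuration)|$(Platform)' == 'Release|AnyCPU' ">
  <!-- Equivalent to setting "Generate Serialization Assembly" to Off, so SGEN is skipped. -->
  <GenerateSerializationAssemblies>Off</GenerateSerializationAssemblies>
</PropertyGroup>
```

Keeping the setting in the project file means every developer and build server picks it up without having to change the property page by hand.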

My concern is that if we set "Generate Serialization Assembly" to off, XmlSerializer will generate serialization code and a serialization assembly for each type when the SharePoint web app runs for the first time (example, after server reboot or IIS reset). Because this happens only once it is not a huge performance issue, but nonetheless, I would still like to find a proper solution.

Enjoy!!

Solved: “The collection cannot be modified” error on Content Type Update.

I recently came across a problem whereby an exception is thrown when Update() is called on a SharePoint 2010 content type.

Let me explain the scenario… there are two ways to retrieve content types, those are:

1- SPWeb.ContentTypes which gets the collection of content types for the website.

When you are referring to an SPWeb object and need to go through the content types for that web, the SPWeb.ContentTypes property will only show you a list of content types that have been defined at that site level, not a full list of Content Types that the web has available to it.

and

2- SPWeb.AvailableContentTypes which gets the collection of all content type templates that apply to the current scope, including those of the current website, as well as any parent websites. Use the AvailableContentTypes property if you want to get the list of all content types that are available to the web (including all of those defined at sites above it in the site structure, all the way to the top of the site collection).

Because I wanted my code to retrieve the content type regardless where the content type was available (current web or root web / site collection level) I used SPWeb.AvailableContentTypes.

To illustrate this I created the following very simple C# console app which uses the SharePoint object model to move a custom content type from one group to another.

So the following code should return the content type and allow me to update it... but it won’t work...

    using (SPSite site = new SPSite(demositeURL))
    {
        try
        {
            SPContentType contenttype = site.RootWeb.AvailableContentTypes["Demo Content"];
            if (contenttype != null)
            {
                contenttype.Group = "Demo Content Types";
                contenttype.Update();
            }
        }
        catch (Exception e)
        {
            System.Diagnostics.Debug.WriteLine(e.Message);
        }
    }

If I run the code, SharePoint throws a “The collection cannot be modified.” exception on contenttype.Update();

Thanks to Bernado Nguyen-Hoan I found that you have to ensure that the content type you are updating was not retrieved from the SPWeb.AvailableContentTypes collection. Content types retrieved from this collection (as opposed to SPWeb.ContentTypes) are read-only.

SPWeb.AvailableContentTypes has a non-public property called ReadOnly and its value is true. Therefore content types retrieved from this collection also have a non-public property Collection.ReadOnly – which is also true.

So if you need to update a content type, retrieve it from SPWeb.ContentTypes rather than from SPWeb.AvailableContentTypes.

If you modify the code example from above and change this line:

SPContentType contenttype = site.RootWeb.AvailableContentTypes["Demo Content"];

to:

SPContentType contenttype = site.RootWeb.ContentTypes["Demo Content"];

The code will execute successfully.

    using (SPSite site = new SPSite(demositeURL))
    {
        try
        {
            SPContentType contenttype = site.RootWeb.ContentTypes["Demo Content"];
            if (contenttype != null)
            {
                contenttype.Group = "Demo Content Types";
                contenttype.Update();
            }
        }
        catch (Exception e)
        {
            System.Diagnostics.Debug.WriteLine(e.Message);
        }
    }

Enjoy!!!!

Solved: SharePoint 2013 Fails to render images after upgrade

I recently upgraded a SharePoint 2010 custom solution to SharePoint 2013. One of the issues I came across was that on certain custom pages the images no longer displayed correctly. Instead of displaying an image, the page or control would be blank or the user would be prompted to download a file. The same code worked on a SharePoint 2010 farm and I could not find any obvious exceptions in the SharePoint logs.

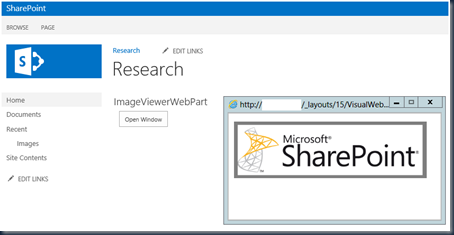

In order to illustrate the problem (and solution) I created the following simple custom project:

My example project contains a SharePoint 2010 Visual Web Part which contains one button. The button uses jQuery to show a custom page from the _Layouts folder. The custom page contains code-behind which displays an image from the current web’s “Images” library.

I tested the solution in Visual Studio 2010 and all worked fine. When the user clicks on the button, the custom page is shown and it renders the image as expected.

However, when I build and deploy the same project in SharePoint 2013, the custom page does not show the image; instead the user is prompted to download a file.

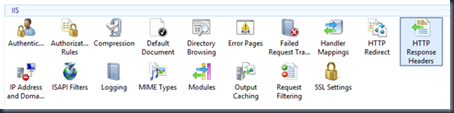

My colleague Hennie van Wyk quickly discovered that there is a difference between the SharePoint 2010 and SharePoint 2013 Web App’s HTTP Response Headers in IIS.

If you open IIS Manager and go to the HTTP Response Headers of the relevant SharePoint Web App you will see that in SharePoint 2013 two new response headers are introduced.

They are “X-Content-Type-Options” with a value “nosniff” and “X-MS-InvokeApp” with a value “1; RequireReadOnly”.

We further discovered that if we delete the X-Content-Type-Options response header the custom code worked and the images displayed on the custom page. I do not want to delete or disable standard SharePoint configuration and I certainly cannot expect my customers to do the same so I decided to investigate the possibility of changing the custom code so that it renders the new image regardless of the “X-Content-Type-Options” response header.

So, what is the X-Content-Type-Options response header?

I found a very helpful blog post by Mark Jones in which he explains that the X-Content-Type-Options response header with a value of “nosniff” is a directive sent by IIS that tells the browser not to sniff the MIME type. With sniffing disabled, IE won't try to automatically determine what your content is (e.g. HTML, PNG, CSS, script, etc.).

This means that it’s up to the developer to tell the browser what the content is. And, if what you are sending down isn't in IE’s allowable list, it is going to refuse to execute your content, hence the blank areas of the screen.

“If the "nosniff" directive is received on a response retrieved by a script reference, Internet Explorer will not load the "script" file unless the MIME type matches one of the values”.

I also found the following great article written by Charles Torvalds.

Next step, let’s try to fix our custom code…

In the example SharePoint 2010 project which I developed for this blog post I had the following code in the Page_Load event of the custom page. This illustrates that when the page loads the code will retrieve the first item from the images library and render the image.

    protected void Page_Load(object sender, EventArgs e)
    {
        SPList imagelist = SPContext.Current.Web.Lists.TryGetList("Images");
        if (imagelist != null)
        {
            SPFile oFile = imagelist.Items[0].File;
            byte[] img = oFile.OpenBinary();
            Response.Clear();
            Response.AddHeader("Content-Length", img.Length.ToString());
            Response.ContentType = "application/octet-stream";
            Response.BinaryWrite(img);
            Response.End();
        }
    }

Note that the following line of code sets the response MIME type to “application/octet-stream”. Response.ContentType = "application/octet-stream";

The code worked fine in a SharePoint 2010 web application because in SharePoint 2010 there is no default X-Content-Type-Options response header with a “nosniff” value.

I changed the code to specify a MIME type of “image/png” instead, deployed it to my SharePoint 2013 web application, and everything worked fine.

    protected void Page_Load(object sender, EventArgs e)
    {
        SPList imagelist = SPContext.Current.Web.Lists.TryGetList("Images");
        if (imagelist != null)
        {
            SPFile oFile = imagelist.Items[0].File;
            byte[] img = oFile.OpenBinary();
            Response.Clear();
            Response.AddHeader("Content-Length", img.Length.ToString());
            Response.ContentType = "image/png";
            Response.BinaryWrite(img);
            Response.End();
        }
    }
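Hard-coding “image/png” is fine for this demo, but an image library usually holds more than one file format. The helper below is a minimal sketch of one way to pick the MIME type from the file name instead. The class ImageMimeTypes and its method FromFileName are hypothetical names introduced for illustration (they are not part of the original project), and the extension list is an assumption that you would extend to suit your own library.

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical helper, not part of the original project.
public static class ImageMimeTypes
{
    // Assumed set of image formats; extension lookup is case-insensitive.
    private static readonly Dictionary<string, string> Map =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase)
        {
            { ".png", "image/png" },
            { ".jpg", "image/jpeg" },
            { ".jpeg", "image/jpeg" },
            { ".gif", "image/gif" },
            { ".bmp", "image/bmp" }
        };

    // Returns the MIME type for a file name, or null when the extension is unknown.
    public static string FromFileName(string fileName)
    {
        string extension = Path.GetExtension(fileName ?? string.Empty);
        string mimeType;
        return Map.TryGetValue(extension, out mimeType) ? mimeType : null;
    }
}
```

In the Page_Load code above, the content type line could then become Response.ContentType = ImageMimeTypes.FromFileName(oFile.Name) ?? "application/octet-stream"; so that known image formats are rendered inline and anything else still falls back to a download.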

This might be a very simple example of a potentially serious challenge when you upgrade SharePoint 2007 or SharePoint 2010 solutions to SharePoint 2013.

In his blog post Mark Jones also mentions examples where he found JavaScript with a MIME type of “text/blank” which should have been “application/javascript”, and other cases of JSON which was sent down as “application/json” when it should have been “application/javascript”. I can only imagine that there are hundreds of similar cases.

Solved: The web being updated was changed by an external process.

Today I had to troubleshoot SharePoint site provisioning code which misbehaved on a particular server. It is always fun if code works well on a dozen SharePoint farms and then fails on one farm.

The code is a really simple C# console app which reads an XML file and then uses the taxonomy specified in that file to create a SharePoint web.

As part of the provisioning, the code will activate a set of standard SharePoint features and then activate the custom developed features.

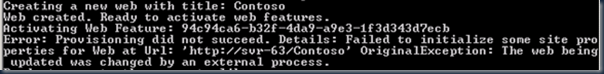

I used the application on many different SharePoint 2010 farms to provision over 30 sites but recently we ran the tool on a new farm and we suddenly started getting the following error when the code tries to activate the SharePoint Publishing Web feature:

Error: Provisioning did not succeed. Details: Failed to initialize some site properties for Web at Url: 'http://demo/Contoso' OriginalException: The web being updated was changed by an external process.

This is the original code – before I made changes:

    Console.WriteLine("Creating a new web with title: " + webTitle);
    site.AllowUnsafeUpdates = true;
    SPWeb newweb = site.AllWebs.Add(webTitle, webTitle, webTitle, lcid, "STS#1", false, false);
    site.AllowUnsafeUpdates = false;
    Console.WriteLine("Web created. Ready to activate web features.");
    if (newweb != null)
    {
        ActivateWebFeatures(newweb);
    }
    Console.WriteLine("Web created successfully");

I discovered that the SPWeb newweb = site.AllWebs.Add(...) call actually returns the SPWeb object before all provisioning has been completed, so the next time I try to update newweb I get the error “The web being updated was changed by an external process.”

In order to solve this I fetched a fresh, up-to-date instance of the SPWeb by adding the following line of code: newweb = site.OpenWeb(webTitle);

The following code works well:

    Console.WriteLine("Creating a new web with title: " + webTitle);
    site.AllowUnsafeUpdates = true;
    SPWeb newweb = site.AllWebs.Add(webTitle, webTitle, webTitle, lcid, "STS#1", false, false);
    site.AllowUnsafeUpdates = false;
    Console.WriteLine("Web created. Ready to activate web features.");
    newweb = site.OpenWeb(webTitle);
    if (newweb != null)
    {
        ActivateWebFeatures(newweb);
    }
    Console.WriteLine("Web created successfully");

Take Control: Programmatically verify SharePoint Managed Properties

This blog post is relevant to the following common search error:

Property doesn't exist or is used in a manner inconsistent with schema settings.

If you develop a custom SharePoint 2010 solution which uses the FullTextSqlQuery class, chances are good that after deployment to a new farm you will come across the following error:

“Property doesn't exist or is used in a manner inconsistent with schema settings”

This problem occurs when your source code tries to execute custom search queries against a Search Service implementation in which the crawled properties, managed properties or property mappings on which your code depends are not configured correctly.

This blog post will show you how to programmatically take control of search-dependent implementations, and I provide a source code example which you can use to verify that the correct dependencies are in place.

Imagine you developed a web part which allows a user to provide criteria and then search the site collection or specific webs (depending on search scope) to return a specific set of field values for each result item.

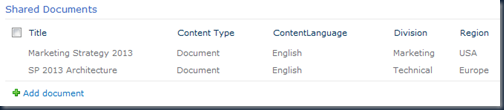

You have a document library:

The search code looks like this:

    ResultType resultType = ResultType.RelevantResults;
    string queryString = string.Empty;
    try
    {
        FullTextSqlQuery fullTextSqlQuery = new FullTextSqlQuery(site);
        fullTextSqlQuery.ResultTypes = resultType;
        queryString = "SELECT Title,Division, Region, Language FROM SCOPE() WHERE FREETEXT(*, '*test* ') AND (\"SCOPE\" = 'Demo Site Scope') AND (\"Division\" = 'Technical')";
        fullTextSqlQuery.QueryText = queryString;
        ResultTableCollection resultTableCollection = fullTextSqlQuery.Execute();
        ResultTable resultTable = resultTableCollection[resultType];
        if (resultTable != null && resultTable.RowCount > 0)
        {
            while (resultTable.Read())
            {
                StringBuilder output = new StringBuilder();
                output.Append("Title:" + resultTable["TITLE"].ToString());
                output.Append(", Division:" + resultTable["Division"].ToString());
                output.Append(", Region:" + resultTable["Region"].ToString());
                output.Append(", Language:" + resultTable["Language"].ToString());
                Console.WriteLine(output);
            }
        }
    }
    catch (Microsoft.Office.Server.Search.Query.QueryMalformedException querymalformedexception)
    {
        Console.WriteLine("Query syntax error: " + querymalformedexception.Message);
    }
    catch (Microsoft.Office.Server.Search.Query.ScopeNotFoundException searchscopeerror)
    {
        Console.WriteLine("Search scope error: " + searchscopeerror.Message);
    }
    catch (Microsoft.Office.Server.Search.Query.InvalidPropertyException invalidpropertyexception)
    {
        Console.WriteLine("Property error: " + invalidpropertyexception.Message);
    }

You test the solution on your development server and everything works well, but after you deploy to a QA or production environment your custom search code throws an error:

Property doesn't exist or is used in a manner inconsistent with schema settings.

The error does not contain information specific enough to help us identify which properties are not in place.

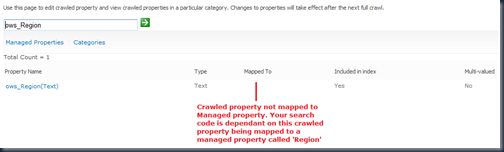

If you open SharePoint Central Admin –> Manage Service Applications –> Search Service Application –> Metadata Properties, you discover that some of the crawled properties which the search code relies on are not mapped to managed properties. In other cases some of the crawled properties do not even exist.

This is quite a common problem. Custom search code depends on specific configuration being in place, and there is always a risk during new deployments that either the provisioning code did not work properly or the SharePoint farm administrator did not configure the custom components correctly.

Obviously we want to be SharePoint Heroes and see that our custom solutions work well after every new installation, so instead of relying on people or process let’s rather build a simple ‘success verification’ application which we can run on each new farm implementation to tell us whether all the dependencies are in place.

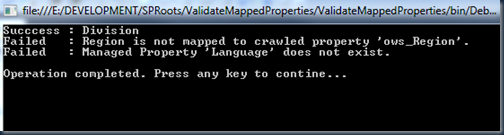

The source code from this post will generate the following output which will tell us exactly what the problem is:

Validate Mapped Properties (command-line tool):

This example was developed as a C# Console Application but you can use the code almost anywhere (perhaps a custom SharePoint configuration page is a good idea).

Add references to: Microsoft.Office.Server.Search and Microsoft.SharePoint

Add the following using statements:

    using System;
    using Microsoft.SharePoint;
    using Microsoft.Office.Server.Search.Administration;
    using Microsoft.Office.Server.Search.Query;
    using Microsoft.Office.Server;
    using System.Collections.Generic;
    using System.Collections;
    using System.Linq;
    using System.Text;

I developed a function called ValidateMappedProperties which takes two input parameters. The one input parameter is the SPSite and the other input parameter is a list of MetadataProperty objects.

The ValidateMappedProperties function will iterate through the list of MetadataProperty objects and for each item query the SP Search Service to check whether the crawled property, managed property and property mapping are in place.

MetadataProperty class

First let’s look at the MetadataProperty class. This class allows me to instantiate a new MetadataProperty object and set the expected properties. It is not necessary for you to create such a class but it does make it easier to manage the input and result operations.

    namespace ValidateMappedProperties
    {
        class MetadataProperty
        {
            public MetadataProperty()
            {
                this.PropertySet = Guid.Empty;
                this.MappedPropertyName = String.Empty;
                this.MappedPropertyType = 0;
                this.CrawledPropertyName = String.Empty;
                this.Verified = false;
                this.VerifiedMessage = String.Empty;
            }

            public MetadataProperty(Guid propertyset, string mappedpropertyname, Int32 mappedpropertytype, string crawledpropertyname)
            {
                this.PropertySet = propertyset;
                this.MappedPropertyName = mappedpropertyname;
                this.MappedPropertyType = mappedpropertytype;
                this.CrawledPropertyName = crawledpropertyname;
                this.Verified = false;
                this.VerifiedMessage = String.Empty;
            }

            public Guid PropertySet { get; set; }
            public string MappedPropertyName { get; set; }
            public Int32 MappedPropertyType { get; set; }
            public string CrawledPropertyName { get; set; }
            public bool Verified { get; set; }
            public string VerifiedMessage { get; set; }
        }
    }

ValidateMappedProperties function

Now let’s consider the ValidateMappedProperties function.

This function will query the Search Service Application for a list of crawled properties and a list of managed properties. It will then loop through the list of supplied MetadataProperty items and verify whether all the dependencies are in place.

    public static void ValidateMappedProperties(SPSite site, List<MetadataProperty> managedproperties)
    {
        try
        {
            // Resolve the Search Service Application behind the site's default proxy.
            SPServiceContext serviceContext = SPServiceContext.GetContext(site);
            SearchServiceApplicationProxy searchApplicationProxy = serviceContext.GetDefaultProxy(typeof(SearchServiceApplicationProxy)) as SearchServiceApplicationProxy;
            SearchServiceApplicationInfo searchApplicationInfo = searchApplicationProxy.GetSearchServiceApplicationInfo();
            SearchServiceApplication searchApplication = Microsoft.Office.Server.Search.Administration.SearchService.Service.SearchApplications.GetValue<SearchServiceApplication>(searchApplicationInfo.SearchServiceApplicationId);
            Schema sspSchema = new Schema(searchApplication);

            // Load the crawled and managed properties once, up front.
            IEnumerable<CrawledProperty> _crawledProperties;
            _crawledProperties = sspSchema.QueryCrawledProperties(string.Empty, 1000000, Guid.NewGuid(), string.Empty, true).Cast<CrawledProperty>();
            ManagedPropertyCollection allprops = sspSchema.AllManagedProperties;

            foreach (MetadataProperty property in managedproperties)
            {
                // Assume success until one of the checks below fails.
                property.Verified = true;
                property.VerifiedMessage = "Success";

                // Check 1: the crawled property exists.
                var crawledProperty = _crawledProperties.FirstOrDefault(c => c.Name.Equals(property.CrawledPropertyName));
                if (crawledProperty == null)
                {
                    property.Verified = false;
                    property.VerifiedMessage = "Crawled Property '" + property.CrawledPropertyName + "' does not exist.";
                    continue;
                }

                // Check 2: the managed property exists.
                if (!allprops.Contains(property.MappedPropertyName))
                {
                    property.Verified = false;
                    property.VerifiedMessage = "Managed Property '" + property.MappedPropertyName + "' does not exist.";
                    continue;
                }

                // Check 3: the managed property is mapped to the crawled property.
                try
                {
                    bool hasmapping = false;
                    ManagedProperty mp;
                    mp = sspSchema.AllManagedProperties[property.MappedPropertyName];
                    List<CrawledProperty> mappedcrawledproperties = mp.GetMappedCrawledProperties(1000);
                    foreach (CrawledProperty item in mappedcrawledproperties)
                    {
                        if (item.Name == property.CrawledPropertyName)
                        {
                            hasmapping = true;
                            break; // mapping found, no need to inspect the rest
                        }
                    }
                    if (!hasmapping)
                    {
                        property.Verified = false;
                        property.VerifiedMessage = property.MappedPropertyName + " is not mapped to crawled property '" + property.CrawledPropertyName + "'.";
                    }
                }
                catch (Exception ex)
                {
                    // Report the failure instead of silently leaving the item marked as verified.
                    property.Verified = false;
                    property.VerifiedMessage = "Could not verify the mapping for '" + property.MappedPropertyName + "': " + ex.Message;
                }
            }
        }
        catch (Exception ex)
        {
            Console.WriteLine("Error: " + ex.Message);
        }
    }

Request Method:

And lastly, the following code illustrates how to define the list of expected MetadataProperty settings, call the ValidateMappedProperties function and then write the results to a console window:

    static void Main(string[] args)
    {
        string siteURL = args[0];
        List<MetadataProperty> managedproperties = new List<MetadataProperty>();
        Guid guidPropset = new Guid("00130329-0000-0130-c000-000000131346"); //this is the SharePoint columns propertyset ID.
        managedproperties.Add(new MetadataProperty(guidPropset, "Division", 31, "ows_Division"));
        managedproperties.Add(new MetadataProperty(guidPropset, "Region", 31, "ows_Region"));
        managedproperties.Add(new MetadataProperty(guidPropset, "Language", 31, "ows_ContentLanguage"));

        using (SPSite site = new SPSite(siteURL))
        {
            ValidateMappedProperties(site, managedproperties);
            foreach (MetadataProperty item in managedproperties)
            {
                if (item.Verified)
                {
                    Console.WriteLine("Success : " + item.MappedPropertyName);
                }
                else
                {
                    Console.WriteLine("Failed : " + item.VerifiedMessage);
                }
            }
        }

        Console.WriteLine("");
        Console.WriteLine("Operation completed. Press any key to continue...");
        Console.ReadKey();
    }

The result will tell us where the problem lies:

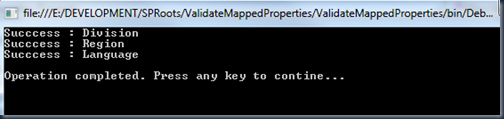

I can now use this information to make the necessary changes in Central Admin and run the tool again. I can repeat this process until I get success on all items:

Now that I know all the dependencies are in place, I am certain that my custom search solution will work.

The screenshot below shows the results produced by running the example search code:

Enjoy!!